Maximizing SASE and Zero Trust Performance: The Vital Role of ‘At Scale’ Distributed Enforcement

Network security deployment architectures evolve regularly, driven by changing enterprise requirements. Some recent industry trends are outlined below:

- Minimizing the cost & maintenance burden associated with disparate and multiple network security systems.

- Implementing a ‘Zero Trust Everywhere’ mandate that encompasses all networks within Data Centers, across WANs, and within Kubernetes clusters.

- Ensuring high performance (throughput, reduced latency, and minimal jitter) to enhance user experience and support real-time applications like WebRTC.

- Catering to the needs of a distributed workforce.

- Meeting the demands of a remote workforce.

- Increased adoption of SaaS Services by enterprises.

- Growing reliance on Cloud for enterprise applications.

- Embracing Multi-Cloud deployments.

- Exploring the potential advantages of Edge and Fog computing.

- Difficulty in training or acquiring skilled personnel in network and security roles.

- Minimizing resources required for managing last mile Internet connectivity for distributed offices.

Legacy network & security architecture

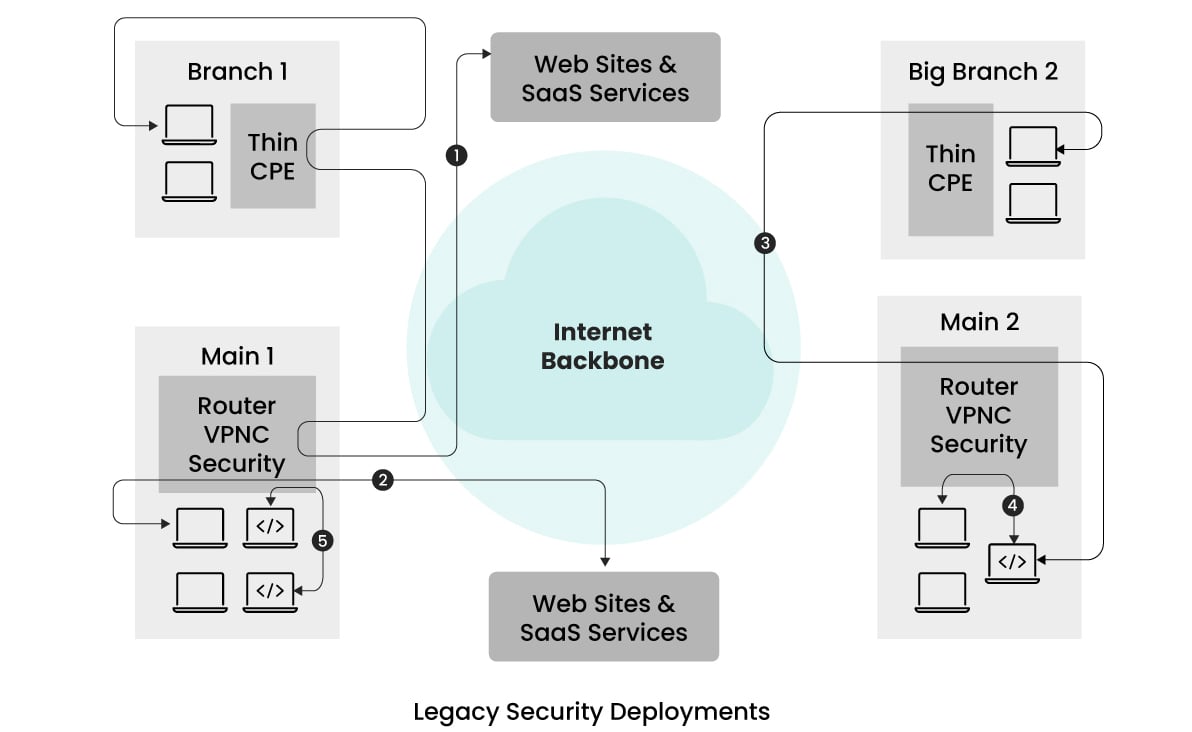

Enterprises with multiple offices and remote workforces traditionally allocated substantial resources to procure and maintain their networking and security infrastructure. The simplified illustration below showcases the typical architecture previously adopted.

This example features an enterprise with two branch offices and two main offices situated across different parts of the world. In an effort to reduce expenses on security appliances and alleviate management overhead, all security enforcement is centralized at the main offices. Traffic from the branch offices and remote users is routed to the nearby main offices for security enforcement before proceeding to its intended destination. ‘Thin CPE’ devices are typically deployed in the branch offices to facilitate this traffic tunneling.

The main offices are equipped with VPN concentrators to terminate the tunnels, along with multiple security devices that detect and remove exploits, implement granular access controls, shield against malware, and prevent phishing attacks.

However, as the traffic flows marked (1) in the picture show, traffic from branch offices to Internet sites follows a hair-pinned route. This routing increases latency and degrades the user experience, especially if the branch and its nearest main office are far apart. Some enterprises attempted to improve throughput and reduce jitter by employing dedicated links, but these solutions were costly and failed to adequately address latency.
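To make the cost of hair-pinning concrete, the short sketch below compares the round-trip time of a direct branch-to-Internet path with a path that detours through a main office. The one-way delays are made-up illustrative numbers, not measurements, and the helper name `round_trip_ms` is hypothetical.

```python
def round_trip_ms(one_way_hops_ms):
    """Round-trip time of a path, given the one-way delay of each hop in ms."""
    return 2 * sum(one_way_hops_ms)


# Hypothetical one-way delays in milliseconds (illustrative only).
BRANCH_TO_SITE = 20   # branch office straight to the Internet site
BRANCH_TO_MAIN = 60   # tunnel from the branch to a distant main office
MAIN_TO_SITE = 25     # main office onward to the Internet site

direct_rtt = round_trip_ms([BRANCH_TO_SITE])
hairpin_rtt = round_trip_ms([BRANCH_TO_MAIN, MAIN_TO_SITE])
added_rtt = hairpin_rtt - direct_rtt

print(f"direct: {direct_rtt} ms, hair-pinned: {hairpin_rtt} ms, added: {added_rtt} ms")
```

With these assumed numbers the detour more than quadruples the round-trip time. A dedicated link can add bandwidth and smooth jitter, but it does not shorten the detour itself.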

Moreover, managing multiple network and security devices, each with its own configuration and analytics dashboard, poses challenges. Because personnel must be trained on multiple interfaces, this complexity makes configurations error-prone.

These legacy security architectures often overlooked zero-trust principles, relying on network segmentation and IP addresses rather than on user-based access control contingent upon user authentication. While this approach functioned effectively at the time, the rise of remote work and the extensive use of NAT (Network Address Translation) made identifying users by IP address increasingly challenging, and the limitations of legacy architectures began to show.
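The difference between IP-based and identity-based access control can be sketched in a few lines. This is only an illustration under assumed names: the `Request` type, the allow list, the group mapping, and both check functions are hypothetical and do not represent any vendor's policy model.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Request:
    source_ip: str
    user: Optional[str]        # None when the user has not authenticated
    groups: FrozenSet[str]     # groups asserted by the identity provider
    app: str


# Hypothetical policy data (illustrative only).
ALLOWED_IPS = {"203.0.113.10"}                     # legacy, IP-based rule
APP_GROUPS = {"payroll": frozenset({"finance"})}   # identity-based rule


def ip_based_allow(req: Request) -> bool:
    """Legacy check: trust whatever arrives from an allowed address."""
    return req.source_ip in ALLOWED_IPS


def identity_based_allow(req: Request) -> bool:
    """Zero-trust style check: require authentication and group membership."""
    if req.user is None:
        return False
    return bool(req.groups & APP_GROUPS.get(req.app, frozenset()))


# Two users behind the same NAT address look identical to the IP-based rule.
alice = Request("203.0.113.10", "alice", frozenset({"finance"}), "payroll")
bob = Request("203.0.113.10", "bob", frozenset({"engineering"}), "payroll")

print(ip_based_allow(alice), ip_based_allow(bob))
print(identity_based_allow(alice), identity_based_allow(bob))
```

Because both users share one translated address, the IP-based rule cannot tell them apart, while the identity-based rule admits only the user in the permitted group.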

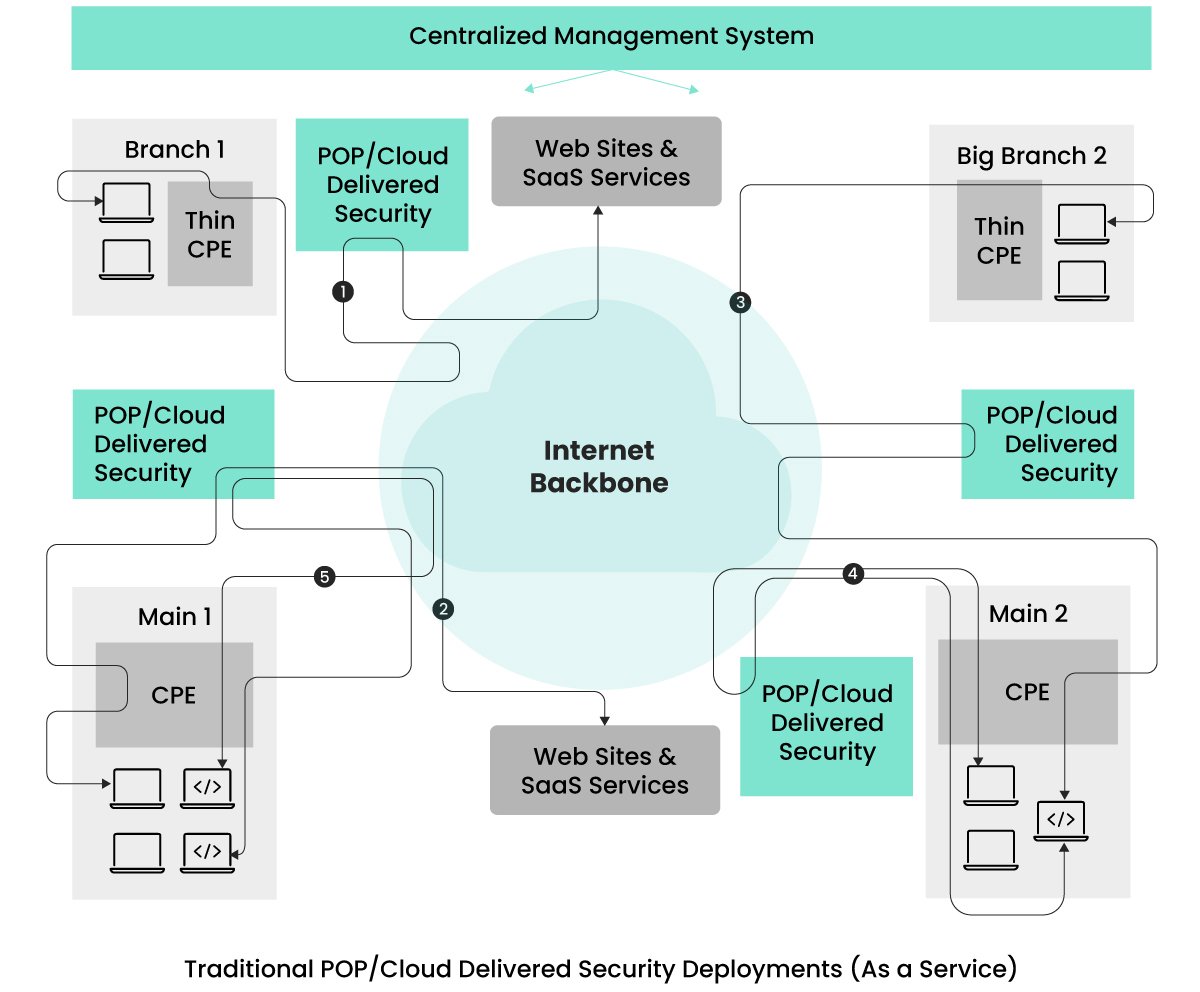

Cloud/POP Delivered Security

In response to challenges related to maintaining on-premise security infrastructure, accommodating the demands of remote work, reducing latency associated with traffic hair-pinning, and the amplified usage of cloud and SaaS services, cloud-based security solutions have emerged as a promising alternative. Many enterprises have begun leveraging these cloud-delivered security solutions to address these issues, as shown in the picture below.

Security services are distributed through various Points of Presence (POPs) by the provider. Enterprises have the flexibility to select the POP locations based on their office placements and the concentration of their remote workforce. Client traffic destined for any location is routed through the nearest POP where security enforcement is applied. This routing is facilitated using CPE devices in offices and VPN client software on remote user devices, reducing latency associated with legacy security architecture due to the proximity of the POP locations.

Initially, the industry saw security services from multiple vendors, but there’s been a shift toward single-vendor, unified technology-based cloud security services. These unified services offer streamlined security configuration and observability management, addressing the fragmented security management of legacy deployment architectures.

While this setup performs well for traffic destined for the Internet/SaaS sites, it introduces a new challenge when both communicating entities are in the same site, data center, or within a Kubernetes cluster, as seen in flows (4) and (5). Flow (4) depicts traffic between entities within the same site being tunneled to the nearest POP location and then routed back. This is done to enforce security measures on all traffic flows, meeting zero-trust requirements. Flow (5) illustrates communication between two application services, which, to meet zero-trust demands, is routed out of the site to the nearest POP for security processing before returning to the same Kubernetes cluster. This process inadvertently increases latency for these flows and may expose traffic to additional entities unnecessarily.

Some enterprises avoid this latency by deploying separate on-premises security appliances, yet this reintroduces the challenges associated with legacy deployment architectures.

Another challenge with POP-delivered security relates to performance isolation. Because these providers serve multiple customers (tenants), performance can suffer from noisy neighbors: other companies’ traffic volume can impact your company’s traffic performance. This happens when traffic from multiple companies shares the same execution context.

As Enterprises seek solutions that meet zero-trust requirements, offer unified policy management, provide performance isolation and mitigate unnecessary latency from hair-pinning, the network security industry has evolved its deployment architectures to address these concerns. Unified Secure Access Service Edge (SASE), provided by companies like Aryaka, has emerged to play a pivotal role in this landscape.

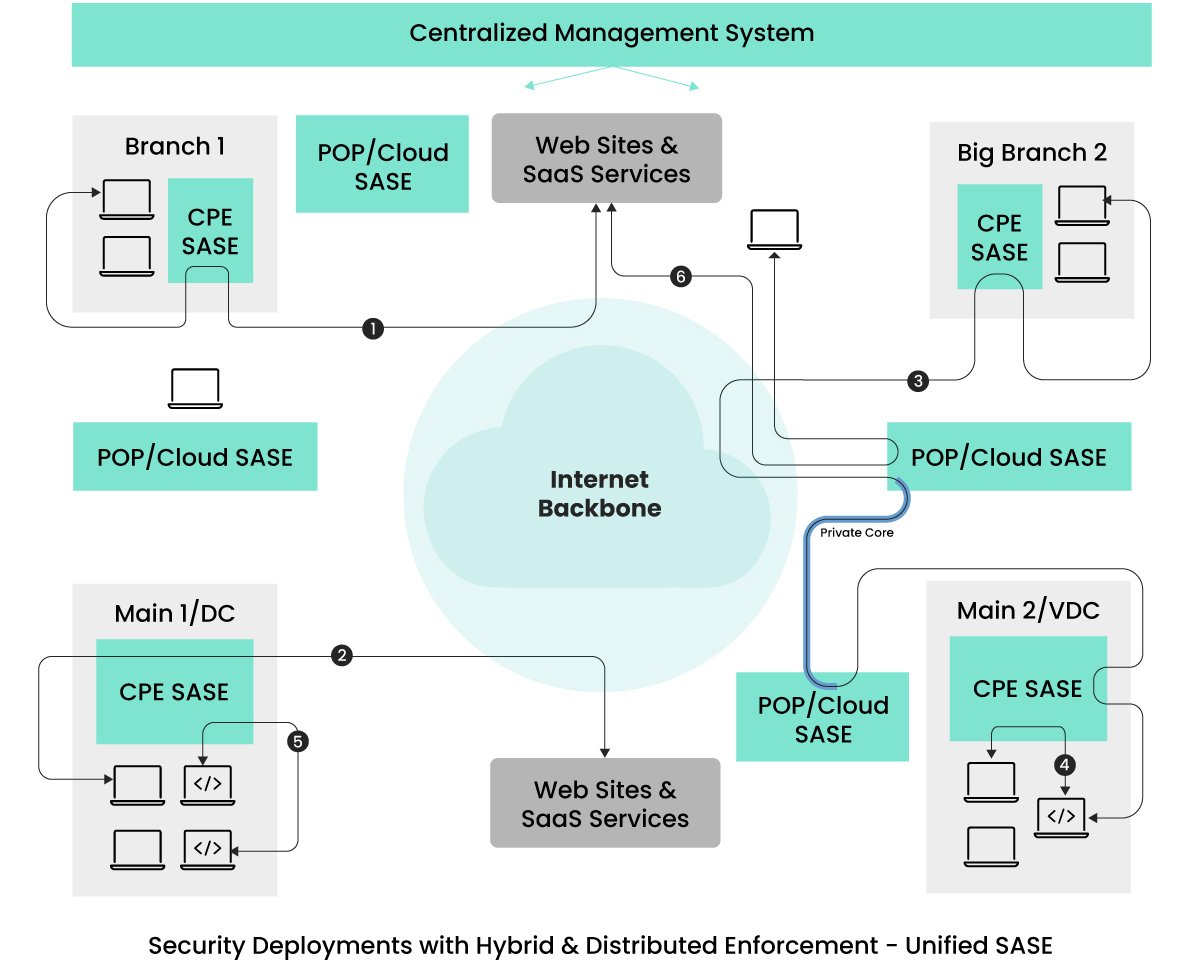

Unified SASE with Hybrid and Distributed Security Services

An ideal architecture is one that alleviates the management complexities found in previous architectures, meets stringent zero-trust requirements, ensures an equal level of security for both North-South and East-West traffic flows, offers unified policy management, provides performance isolation, and circumvents the latency issues linked with hair-pinning.

Unified SASE from companies like Aryaka provides an architecture that meets many of the above requirements, as shown in the picture below.

Within the depicted architecture, network and security enforcement extend beyond POP/Cloud locations to encompass CPE devices, all provided and uniformly managed by the same service provider. This strategy alleviates customer concerns about the maintenance of these CPE devices.

Only when these devices encounter traffic overload will new traffic be directed to nearby POP locations for security enforcement. The volume of traffic rerouted to the POP locations depends on the CPE device model selected, so choosing an appropriately sized model reduces fallback traffic.
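The overload fallback described above can be sketched as a simple admission decision. This is a minimal illustration, not how any SASE product implements it: the `CpeDevice` class, its capacity model (a single throughput figure in Mbps), and the `fallback_share` helper are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CpeDevice:
    capacity_mbps: float      # assumed to depend on the CPE model selected
    load_mbps: float = 0.0    # throughput currently enforced locally

    def choose_enforcement_point(self, flow_mbps: float) -> str:
        """Enforce a new flow locally if capacity allows, else fall back to the POP."""
        if self.load_mbps + flow_mbps <= self.capacity_mbps:
            self.load_mbps += flow_mbps
            return "cpe"
        return "pop"


def fallback_share(capacity_mbps: float, flows_mbps: List[float]) -> float:
    """Fraction of offered traffic sent to the POP for a given CPE capacity."""
    cpe = CpeDevice(capacity_mbps)
    to_pop = sum(f for f in flows_mbps if cpe.choose_enforcement_point(f) == "pop")
    return to_pop / sum(flows_mbps)


# Ten hypothetical flows of 100 Mbps each, offered to two assumed CPE sizes.
flows = [100.0] * 10
small_model_share = fallback_share(500.0, flows)
large_model_share = fallback_share(1000.0, flows)
print(small_model_share, large_model_share)
```

In this toy model the smaller device sends half of the offered traffic to the POP, while the larger one enforces everything locally, which is the sizing trade-off the paragraph describes.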

Regarding traffic from remote access users (depicted as 6), it follows a similar path to previous architectures. Traffic from remote user devices undergoes network and security processing at the nearest POP location before reaching its intended destination.

Flows (1) and (2) within this architecture bypass the POP locations, eliminating even minimal latencies present in cloud-delivered setups.

Moreover, flows (4) and (5), which are contained within a site, not only avoid latency overheads but also reduce the attack surface. This local security enforcement is essential for real-time applications like WebRTC, which are sensitive to latency.

For application services in different sites necessitating deterministic, low-jitter performance, some SASE providers offer dedicated connectivity among sites via the nearest POP locations. Flow (3) exemplifies traffic flows benefiting from dedicated bandwidth and links, bypassing the Internet backbone to achieve deterministic jitter.

It’s important to note that not all unified SASE offerings are identical. Some SASE providers still redirect all office traffic to the nearest POP locations for network optimization and security. Although they may mitigate latency overheads with multiple POP locations, any further reduction in latency is advantageous for enterprises, especially in anticipation of future low-latency application needs. Enterprises will likely seek solutions that combine ease of use with high-grade security, without compromising performance or user experience. Given the need for low jitter across offices, enterprises should also consider providers offering dedicated virtual links among offices that bypass the Internet backbone.

Another crucial factor for Enterprises is selecting providers that manage maintenance and software updates for SASE-CPE devices. In legacy models, Enterprises bore the responsibility for upgrades and replacements. To avoid this concern, Enterprises should consider service providers that offer comprehensive managed white-glove services covering the entire SASE infrastructure lifecycle, encompassing tasks such as Customer Premises Equipment (CPE) device management, configuration, upgrades, troubleshooting and observability. These providers serve as one-stop shops, handling all aspects of network and security, while also offering co-management or self-service options for enterprises.

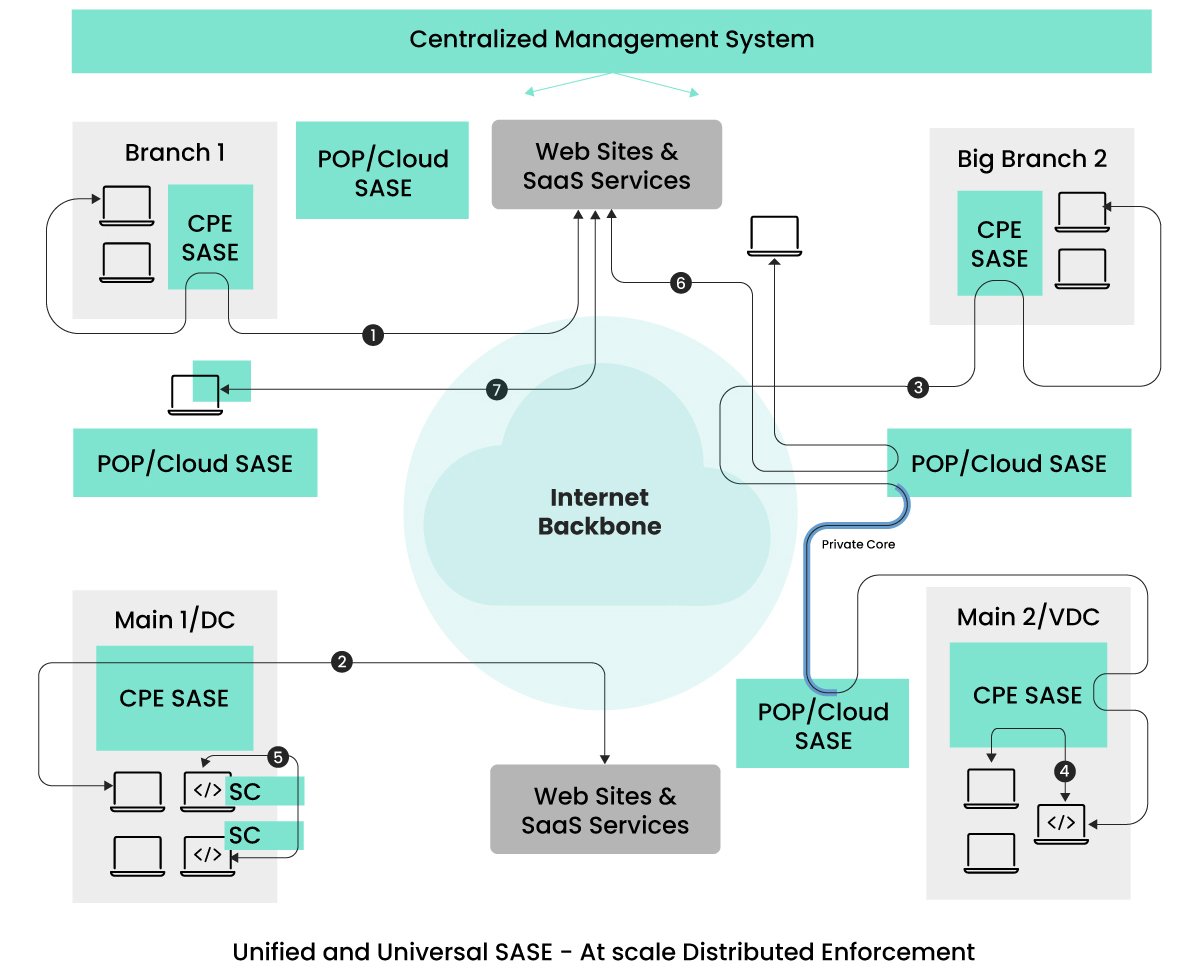

Looking Ahead – Universal and Unified SASE

Although the existing architectural framework, ‘Unified SASE with Distributed Security,’ satisfactorily fulfills several initial requirements detailed in the article, additional strategies exist to enhance application performance without compromising security integrity. As we look to the future, we anticipate advancements in security enforcement and traffic optimization beyond POPs and the Edge of the offices. These new architectural approaches will target flows (5) and (7), specifically aimed at improving the latency aspect of performance.

As illustrated in the picture, the concept of implementing security and optimization universally, particularly at the origin of the traffic, can yield optimal performance.

Key traffic flows to note are depicted as (5) and (7).

Micro/mini-service-based application architectures are becoming standard in the application landscape. These mini-services can be deployed within a Kubernetes cluster, spread across clusters within data centers, or distributed across geographical locations. When communicating services exist within the same Kubernetes cluster, it’s advantageous to keep the traffic within the cluster and apply security and optimization directly within the Kubernetes environment. Kubernetes allows sidecars to be added to the Pods hosting the mini-services. We anticipate SASE providers will leverage this feature in the future to deliver comprehensive security and optimization functions, meeting zero-trust requirements without compromising performance.
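As a rough illustration of the sidecar idea, the sketch below adds a second container to a Pod manifest represented as a plain Python dictionary. The top-level fields (`apiVersion`, `kind`, `metadata`, `spec.containers`) follow the standard Kubernetes Pod schema, but the container names, the image references, and the `with_security_sidecar` helper are hypothetical. No SASE provider's actual sidecar is shown here.

```python
import copy
import json

# Hypothetical sidecar container; the name and image are placeholders.
SIDECAR = {"name": "sase-sidecar", "image": "example.invalid/sase-sidecar:latest"}


def with_security_sidecar(pod: dict) -> dict:
    """Return a copy of the Pod manifest with the sidecar container added once."""
    patched = copy.deepcopy(pod)
    containers = patched["spec"]["containers"]
    if all(c["name"] != SIDECAR["name"] for c in containers):
        containers.append(dict(SIDECAR))
    return patched


# A minimal Pod hosting one hypothetical mini-service.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "orders"},
    "spec": {"containers": [{"name": "orders", "image": "example.invalid/orders:1.0"}]},
}

patched = with_security_sidecar(pod)
print(json.dumps(patched, indent=2))
```

The application container is left unchanged and the security function runs alongside it in the same Pod, so traffic between services can be inspected without leaving the cluster. In practice, service meshes usually automate this injection rather than editing manifests by hand.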

Opting for a single universal SASE provider grants enterprises a unified management interface for all their security needs, regardless of the placement of communicating services. Flow (5) in the picture depicts security enforcement via sidecars (SC). It’s important to note that sidecars are not novel in the Kubernetes world; service mesh technologies use a similar approach for traffic management. Some service mesh providers have begun integrating threat security functions, such as WAAP, into the sidecars. Considering that services can communicate with the Internet and other SaaS services, comprehensive security measures are essential. Consequently, it’s foreseeable that service mesh and SASE will converge in the future, as enterprises seek a comprehensive and unified solution.

Flow (7) reflects an approach many SASE providers are exploring: going beyond VPN client functionality and performing SASE functions directly on the endpoint. Extending SASE to the endpoints helps bypass any latency concerns associated with POP locations. As endpoints grow in computational power and offer improved controls to prevent denial of service by malicious entities, it has become feasible to extend SASE enforcement to the endpoints.

While service providers handle the maintenance burden on the SASE side, enterprises may still need to ensure that implementing SASE on the endpoints does not introduce new issues. Therefore, enterprises seek flexibility in enabling SASE extension to endpoints specifically for power users.

Closing Thoughts

This article has illustrated the evolution of network and security, charting a course from legacy systems to modern cloud-delivered solutions, Unified SASE with distributed enforcement, and prospective future architectural designs.

It’s paramount to recognize the variances in solution offerings. Enterprises must conduct thorough evaluations of service providers, seeking ‘as-a-service’ solutions that cover distributed enforcement across POPs and CPE devices, encompassing OS and software upgrades, while maintaining consistent management practices that uphold both security and performance.

In addition, it’s critical for enterprises to prioritize managed (or co-managed) services from a single provider. These services should not only provide Internet connectivity solutions with advanced technical support but also rapidly adapt to new requirements and address emerging threats. Opting for a managed service provider that uses as-a-service offerings from other SASE vendors can complicate future enhancements, entailing prolonged negotiations between the managed service provider and the technology providers. Given the imperative need for swift resolutions, I believe that Unified SASE solutions with comprehensive managed service offerings from one provider stand as the ideal choice for enterprises.


CTO Insights blog

The Aryaka CTO Insights blog series provides thought leadership for network, security, and SASE topics. For Aryaka product specifications refer to Aryaka Datasheets.
