How Latency, Packet Loss, and Distance Kill Application Performance

Latency, packet loss, distance, and application performance. What do all these terms have to do with each other?

If you manage IT networks for a global enterprise, it’s important to step back and look at the big picture, so you can more clearly see how these factors affect one another.

This may sound like “Networking 101” to some of you, but it’s critical to understand the relationships between these terms and their combined impact on application performance.

Definitions:

- (Network) Latency is an expression of how much time it takes for a packet of data to get from one designated point to another.

- Packet loss is the failure of one or more transmitted packets (could be data, voice or video) to arrive at their destination.

- Distance is the intervening space between two points or, for the sake of enterprise networks, two offices.

- TCP (Transmission Control Protocol) is a standard that defines how to establish and maintain a network conversation via which application programs can exchange data.
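To see how distance alone turns into latency, here is a minimal sketch that estimates the best-case round-trip time over fiber. The constant `FIBER_KM_PER_S` and the function name `min_rtt_ms` are illustrative assumptions, not from any product; the figure of roughly 200,000 km/s reflects light travelling through fiber at about two-thirds of its speed in a vacuum. Real paths are longer than the straight-line distance and add queuing and processing delay, so treat this as a floor.

```python
# Assumed propagation speed of light in optical fiber (about 2/3 of c).
FIBER_KM_PER_S = 200_000.0


def min_rtt_ms(distance_km: float) -> float:
    """Best-case round-trip time in milliseconds for a given one-way distance.

    Counts propagation delay only; routing detours, queuing, and
    processing delay are ignored.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    one_way_s = distance_km / FIBER_KM_PER_S
    return 2.0 * one_way_s * 1000.0


if __name__ == "__main__":
    for km in (100, 1_000, 10_000):
        print(f"{km:>6} km -> at least {min_rtt_ms(km):.1f} ms RTT")
```

Under these assumptions, two offices 10,000 km apart can never see a round trip faster than about 100 ms, no matter how much bandwidth is purchased.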

The Big Picture:

When there is distance between the origin server and the user accessing that server, the user needs a reliable network connection to complete a task. This network may be a private network, like a point-to-point link or MPLS. It may also be public, typically over the Internet. If the network has packet loss, the overall throughput between the server and the user drops sharply as distance increases. This means that the further away the user is from the origin server, the less usable the network becomes.

Why is that?

The main culprit is TCP (Transmission Control Protocol), the standard defined above that governs how application programs establish and maintain a network conversation.

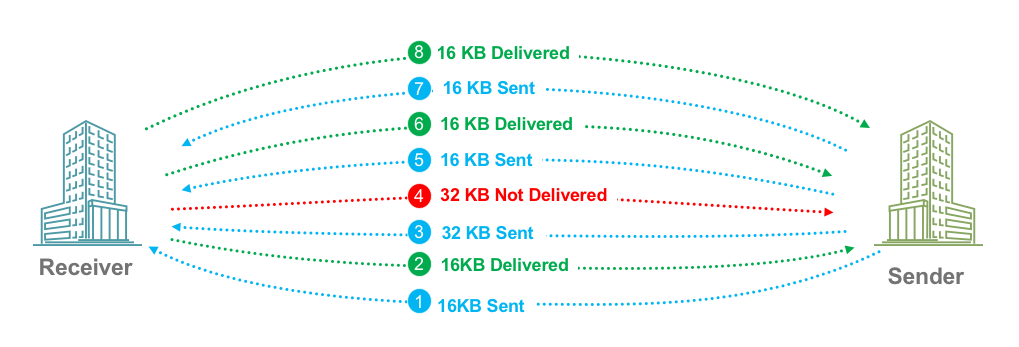

TCP is the protocol that provides reliable, ordered, and error-checked delivery of data between servers and users across a network. TCP is a good guy and helps with data quality. It’s also a connection-oriented protocol, which means you must first establish a connection with a remote host or server before any data can be sent.

Once a TCP connection is established, the next step is flow control, which determines how fast the sender can send data and how much the receiver can reliably accept. Depending on the quality of the network, the flow is governed by the window sizes each end advertises. The two ends may settle on different values if the client and the server view the network’s characteristics differently.
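A rough way to see why window size matters over distance: a sender can have at most one window of unacknowledged data in flight per round trip, so throughput is capped at window size divided by round-trip time. The sketch below assumes that simple model; the function name `window_limited_throughput_mbps` is hypothetical, and 65,535 bytes is the largest window TCP can advertise without the window scaling option.

```python
def window_limited_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on throughput (Mbps) when one window is sent per round trip."""
    if window_bytes <= 0:
        raise ValueError("window_bytes must be positive")
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    bits_per_round_trip = window_bytes * 8
    round_trip_s = rtt_ms / 1000.0
    return bits_per_round_trip / round_trip_s / 1_000_000


if __name__ == "__main__":
    window = 65_535  # largest window without TCP window scaling
    for rtt in (10, 50, 100, 200):
        mbps = window_limited_throughput_mbps(window, rtt)
        print(f"RTT {rtt:>3} ms -> at most {mbps:.2f} Mbps")
```

With that window, a 100 ms round trip caps a single flow at about 5.2 Mbps, regardless of how fast the underlying link is.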

This has a major impact on application performance!

Certain applications, like FTP, use a single flow and scale to the maximum available window size to complete the operation. However, Windows-based applications tend to be more ‘chatty’ and need multiple back-and-forth exchanges to complete each operation.
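The cost of chattiness can be sketched with simple arithmetic: every back-and-forth exchange pays one round trip, on top of the time needed to push the bytes through the link. The model and the name `operation_time_s` below are illustrative assumptions; the model ignores TCP slow start, loss, and server think time.

```python
def operation_time_s(round_trips: int, rtt_ms: float,
                     payload_bytes: int, bandwidth_mbps: float) -> float:
    """Estimate completion time as latency cost plus serialization cost."""
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    latency_cost_s = round_trips * rtt_ms / 1000.0
    serialization_cost_s = payload_bytes * 8 / (bandwidth_mbps * 1_000_000)
    return latency_cost_s + serialization_cost_s


if __name__ == "__main__":
    # Hypothetical chatty operation: 500 exchanges moving 1 MB in total.
    for label, rtt in (("same campus", 5), ("intercontinental", 150)):
        t = operation_time_s(500, rtt, 1_000_000, 100)
        print(f"{label:>16}: {t:.2f} s")
```

In this hypothetical case the same operation takes about 2.6 seconds at 5 ms of round-trip latency and about 75 seconds at 150 ms, even though the link speed never changed. That is why adding bandwidth does so little for chatty applications.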

The simplistic model to consider:

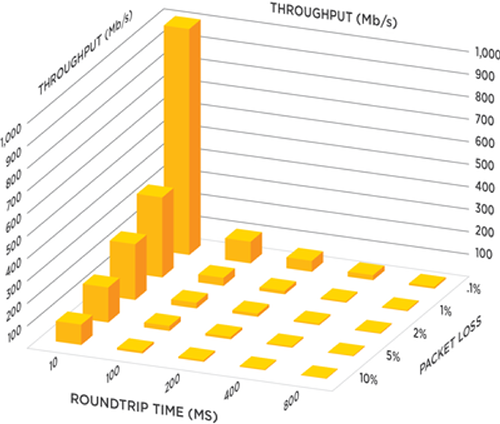

Network + Packet Loss + High Latency = Poor Application Performance for TCP Applications.

In fact, looking at the graphic on maximum achievable throughput, you might wonder how organizations manage to collaborate across long distances at all.

Maximum TCP Throughput with Increasing Network Distance
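One widely cited way to approximate this relationship is the Mathis et al. model for steady-state TCP throughput, which says throughput is roughly proportional to segment size divided by round-trip time times the square root of the loss rate. It is a general approximation for loss-based TCP, not necessarily the model behind the graphic here, and the function name `mathis_throughput_mbps` is just for illustration.

```python
import math


def mathis_throughput_mbps(mss_bytes: int, rtt_ms: float, loss_rate: float,
                           c: float = math.sqrt(1.5)) -> float:
    """Approximate TCP throughput ceiling (Mbps) under random packet loss.

    Uses throughput ~= (MSS / RTT) * (C / sqrt(p)), with C ~= 1.22.
    """
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in (0, 1]")
    rtt_s = rtt_ms / 1000.0
    rate_bps = (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))
    return rate_bps / 1_000_000


if __name__ == "__main__":
    mss = 1460  # typical Ethernet-sized TCP segment payload
    for rtt in (10, 50, 100, 200):
        mbps = mathis_throughput_mbps(mss, rtt, loss_rate=0.01)
        print(f"RTT {rtt:>3} ms, 1% loss -> about {mbps:.2f} Mbps")
```

Under this approximation, doubling the round-trip time halves the achievable throughput, and with 1% loss at a 100 ms round trip a single flow tops out near 1.4 Mbps.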

Voice and video perform poorly when there is packet loss, especially over long-distance Internet links. In fact, even minimal packet loss, combined with latency and jitter, can make a network unusable for real-time traffic. Why? Because these applications typically run over UDP (User Datagram Protocol).

Unlike TCP, the good guy who polices all interaction, UDP couldn’t care less. UDP is connectionless with no handshaking prior to an operation, and exposes any unreliability of the underlying network to the user. There is no guarantee of delivery.
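To make “connectionless” concrete, here is a small sketch using Python’s standard `socket` module on the local loopback interface. The helper name `udp_loopback_roundtrip` is made up for this example. Notice there is no connect or accept step: the sender simply fires a datagram at an address, and nothing in the protocol confirms it arrived.

```python
import socket


def udp_loopback_roundtrip(payload: bytes, timeout_s: float = 1.0) -> bytes:
    """Send one UDP datagram to a local receiver and return what arrives."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(timeout_s)   # if the datagram is lost, give up
        # No handshake: the sender just sends to the receiver's address.
        sender.sendto(payload, receiver.getsockname())
        data, _addr = receiver.recvfrom(2048)
        return data
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(udp_loopback_roundtrip(b"voice frame 1"))
```

On loopback the datagram almost always arrives. Across a lossy WAN it may not, and the only sign would be the receiver’s timeout; any retry or concealment logic is left to the application.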

Here is the path most organizations with a global user base and growing application performance issues tend to take.

- Focus on Internet links. Buy more bandwidth. Throughput typically increases somewhat but not enough to fix the issue.

- Upgrade to MPLS links. Wait 6 to 9 months for deployment. Realize that the problem has not been solved for long-distance connections.

- Consume more and more and more bandwidth. Deploy QoS to deal with congestion and its impact on real-time traffic. Voice and Video do okay, assuming enough bandwidth is configured.

- Realize that you can’t afford to keep buying more bandwidth at this alarming rate.

- Add WAN Optimization appliances. With TCP optimization, data compression, and application proxies, these do address the throughput issues.

- See the cost of managing and maintaining WAN Optimization hardware skyrocket, and then experience sticker shock when it’s time to refresh those appliances.

- Consider your options. Cloud Services? Mobility?

- Revisit your entire enterprise network design. Vow to transform that network. Plan for the Cloud and for Mobility. Account for Big Data and your growing needs. Accommodate acquisitions and business changes.

And how would you do that? If you know that the status quo is broken, you also know that the traditional hardware vendors are trying to squeeze every last red cent out of those boxes before their business model becomes completely outdated.

Aryaka is the world’s first and only global, private, optimized, secure and Managed SD-WAN as a service that delivers simplicity and agility to address all enterprise connectivity and application performance needs. Aryaka eliminates the need for WAN optimization appliances, MPLS and CDNs, delivering optimized connectivity and application acceleration as a fully managed service with a lower-TCO and quick deployment model.

We invite you to learn more by contacting us today or by downloading our latest data sheet on our core solution for global enterprises.
